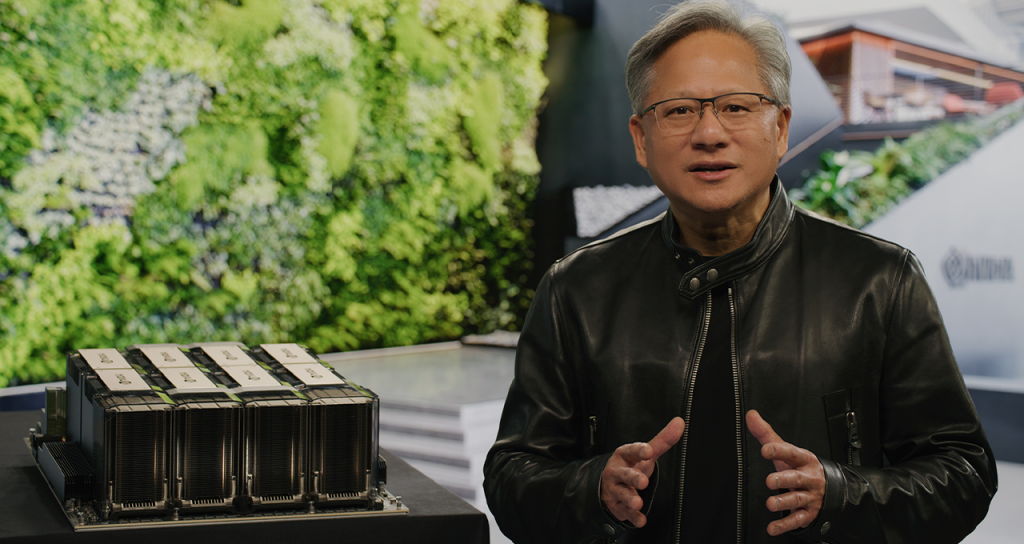

Nvidia DGX Cloud:

Last week, we learned from Bloomberg that Microsoft spent hundreds of millions of dollars to buy tens of thousands of Nvidia A100 graphics chips so that partner OpenAI could train the large language models (LLMs) behind Bing’s AI chatbot and ChatGPT.

Don’t have access to all that capital or space for all that hardware for your own LLM project? Nvidia’s DGX Cloud is an attempt to sell remote web access to the very same thing.

Nvidia GPU Technology Conference:

Announced today at the company’s 2023 GPU Technology Conference, the service rents virtual versions of its DGX Server boxes, each containing eight Nvidia H100 or A100 GPUs and 640GB of memory. The service includes interconnects that scale up to the neighborhood of 32,000 GPUs, storage, software, and “direct access to Nvidia AI experts who optimize your code,” starting at $36,999 a month for the A100 tier.

Wonder how much Nvidia pays Microsoft to rent its own hardware to you.

Meanwhile, a physical DGX Server box can cost upwards of $200,000 for the same hardware if you’re buying it outright, and that doesn’t count the efforts companies like Microsoft say they made to build working data centers around the technology.
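To put the two price points side by side, here is a rough back-of-the-envelope sketch. It assumes the $36,999 monthly price covers a single eight-GPU instance and treats $200,000 as the purchase price (the article only says "upwards of"), and it ignores power, cooling, staffing, and data center costs, which the article notes are not included. The function names are illustrative only.

```python
# Figures quoted in the article; everything else here is an assumption.
RENTAL_PER_MONTH_USD = 36_999   # DGX Cloud starting price, A100 tier
PURCHASE_PRICE_USD = 200_000    # lower bound for a physical DGX box


def breakeven_months(purchase_price: float, monthly_rent: float) -> float:
    """Months of rental after which total rent equals the purchase price."""
    return purchase_price / monthly_rent


def cumulative_rent(months: int, monthly_rent: float = RENTAL_PER_MONTH_USD) -> float:
    """Total rent paid after a whole number of months."""
    return months * monthly_rent


if __name__ == "__main__":
    months = breakeven_months(PURCHASE_PRICE_USD, RENTAL_PER_MONTH_USD)
    print(f"Break-even after about {months:.1f} months of rental")
    print(f"One year of rental: ${cumulative_rent(12):,.0f}")
```

Under those assumptions, rental spending passes the hardware's sticker price a little over five months in, so the service looks aimed at short projects or at teams that cannot build the surrounding data center themselves.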

It’s even possible some of the GPUs you’ll be borrowing might be the exact ones Microsoft used to help train OpenAI’s models — Microsoft Azure is one of the groups that will be hosting DGX Cloud. However, Nvidia says customers will get “full-time reserved access” to the GPUs they’re renting, no need to share with anyone else. Also, Oracle will be the first partner, with Microsoft coming “next quarter,” and Google Cloud will “soon” host the platform as well.

Nvidia says Amgen is using DGX Cloud to hopefully speed up drug discovery, and says insurance cloud services provider CCC and IT provider ServiceNow are using it to train their AI models for claims processing and code generation, respectively.

Conclusion:

Nvidia has announced DGX Cloud, a service that rents virtual versions of its DGX Server boxes, each with eight Nvidia H100 or A100 GPUs and 640GB of memory, to users who do not have the capital or space for their own large language model (LLM) project. The service also provides storage, software, interconnects that can scale up to around 32,000 GPUs, and direct access to Nvidia AI experts who optimize user code, starting at $36,999 a month for the A100 tier.

The service has attracted companies like Amgen, CCC, and ServiceNow to train their AI models for drug discovery, claims processing, and code generation, respectively. Heck, you might be renting the exact same GPUs that Microsoft used to help train ChatGPT and Bing.
